Troubleshooting shards capacity health issues

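Elasticsearch limits the maximum number of shards that each node can hold with the cluster.max_shards_per_node and cluster.max_shards_per_node.frozen settings. The cluster's current shards capacity is available in the shards capacity section of the health API.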
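As a rough illustration of how a per-node setting becomes the cluster-wide figure that the health API reports as max_shards_in_cluster, the sketch below multiplies the per-node limit by the number of eligible data nodes. The function name `cluster_shard_limit` and the node counts are illustrative assumptions, not part of Elasticsearch.

```python
def cluster_shard_limit(max_shards_per_node: int, data_node_count: int) -> int:
    """Cluster-wide shard limit for one group of nodes (frozen or non-frozen)."""
    if max_shards_per_node < 0 or data_node_count < 0:
        raise ValueError("limits and node counts must be non-negative")
    return max_shards_per_node * data_node_count


# Hypothetical node counts, used only to show how the limit scales.
print(cluster_shard_limit(1000, 1))  # 1000
print(cluster_shard_limit(1000, 3))  # 3000
```

Because the limit scales with the node count, adding data nodes raises the cluster-wide ceiling without touching the setting itself.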

Cluster is close to reaching the configured maximum number of shards for data nodes.

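The cluster.max_shards_per_node cluster setting limits the maximum number of open shards for a cluster, counting only data nodes that do not belong to the frozen tier.

This symptom indicates that action should be taken; otherwise, creating new indices or upgrading the cluster could be blocked.

If you are confident that your changes will not destabilize the cluster, you can temporarily increase the limit using the cluster update settings API.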

使用 Kibana

- 登录 Elastic Cloud 控制台。

-

在 Elasticsearch 服务 面板中,点击您的部署名称。

如果您的部署名称被禁用,您的 Kibana 实例可能不健康,在这种情况下,请联系 Elastic 支持。如果您的部署不包含 Kibana,您只需 先启用它。

-

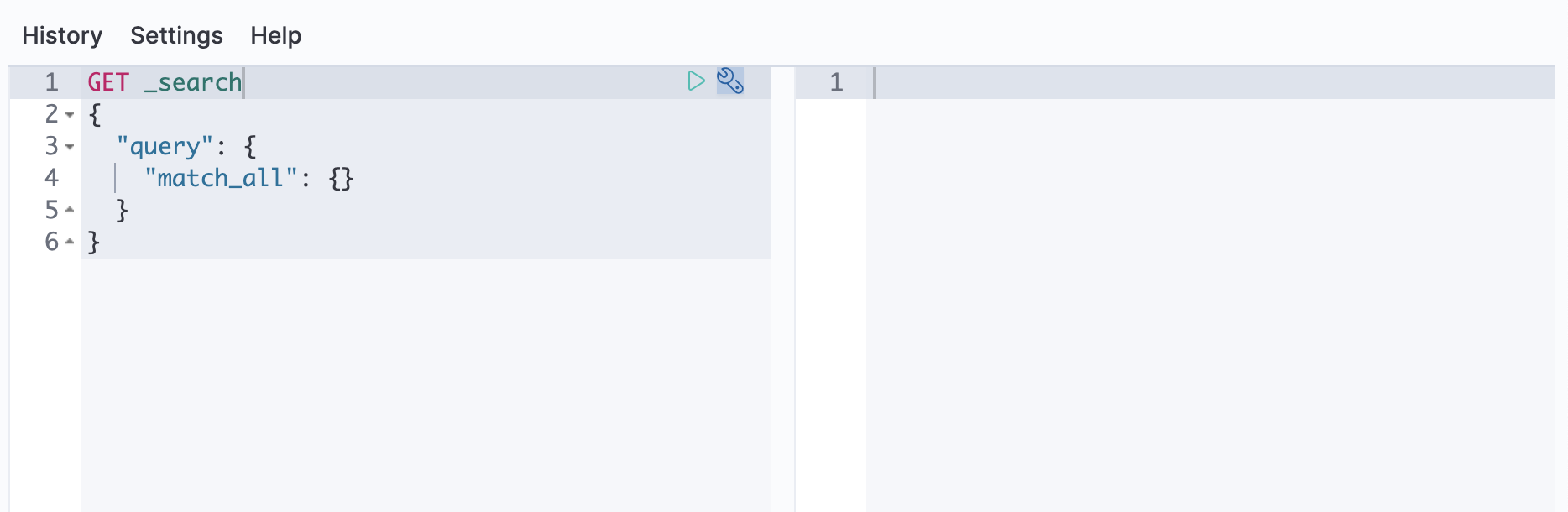

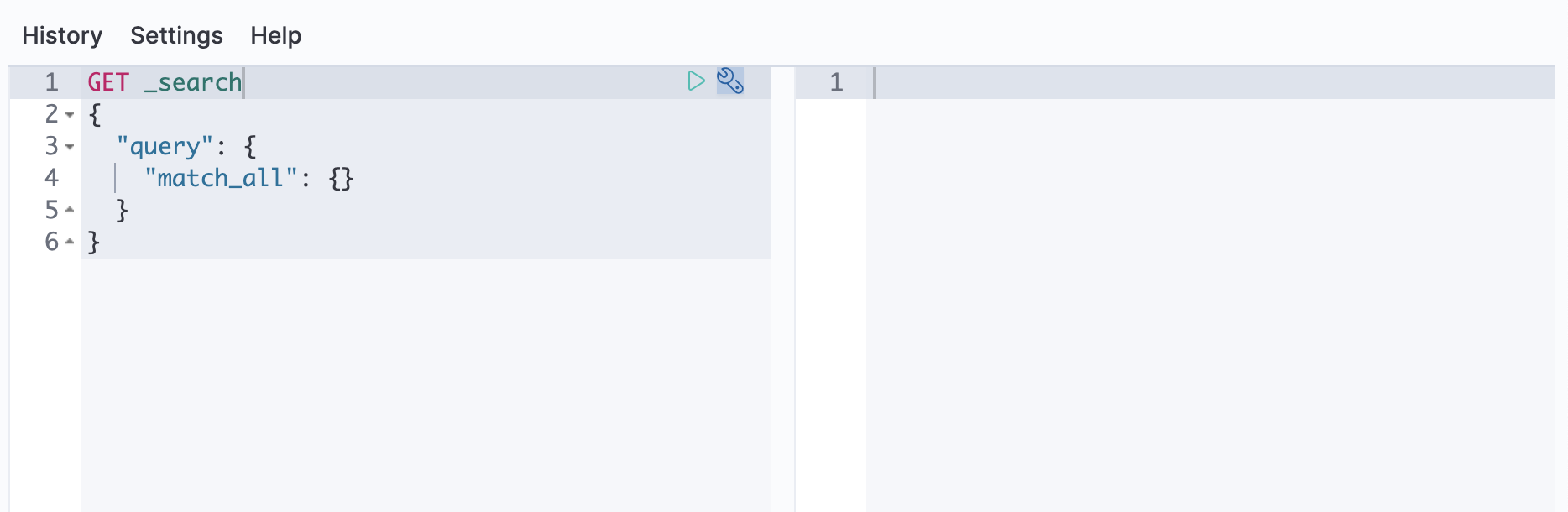

打开部署的侧边导航菜单(位于左上角的 Elastic 徽标下方),然后转到 Dev Tools > Console。

-

根据分片容量指示器检查集群的当前状态

resp = client.health_report( feature="shards_capacity", ) print(resp)response = client.health_report( feature: 'shards_capacity' ) puts response

const response = await client.healthReport({ feature: "shards_capacity", }); console.log(response);GET _health_report/shards_capacity

响应将如下所示

{ "cluster_name": "...", "indicators": { "shards_capacity": { "status": "yellow", "symptom": "Cluster is close to reaching the configured maximum number of shards for data nodes.", "details": { "data": { "max_shards_in_cluster": 1000, "current_used_shards": 988 }, "frozen": { "max_shards_in_cluster": 3000, "current_used_shards": 0 } }, "impacts": [ ... ], "diagnosis": [ ... } } } -

使用适当的值更新

cluster.max_shards_per_node设置resp = client.cluster.put_settings( persistent={ "cluster.max_shards_per_node": 1200 }, ) print(resp)response = client.cluster.put_settings( body: { persistent: { 'cluster.max_shards_per_node' => 1200 } } ) puts responseconst response = await client.cluster.putSettings({ persistent: { "cluster.max_shards_per_node": 1200, }, }); console.log(response);PUT _cluster/settings { "persistent" : { "cluster.max_shards_per_node": 1200 } }此增加应该只是临时的。作为长期解决方案,我们建议您向分片过多的数据层添加节点或 减少不属于冻结层节点上的集群分片数量。

-

要验证更改是否已解决问题,您可以通过检查 健康 API 的

data部分来获取shards_capacity指示器的当前状态resp = client.health_report( feature="shards_capacity", ) print(resp)response = client.health_report( feature: 'shards_capacity' ) puts response

const response = await client.healthReport({ feature: "shards_capacity", }); console.log(response);GET _health_report/shards_capacity

响应将如下所示

{ "cluster_name": "...", "indicators": { "shards_capacity": { "status": "green", "symptom": "The cluster has enough room to add new shards.", "details": { "data": { "max_shards_in_cluster": 1000 }, "frozen": { "max_shards_in_cluster": 3000 } } } } } -

当长期解决方案到位时,我们建议您重置

cluster.max_shards_per_node限制。resp = client.cluster.put_settings( persistent={ "cluster.max_shards_per_node": None }, ) print(resp)response = client.cluster.put_settings( body: { persistent: { 'cluster.max_shards_per_node' => nil } } ) puts responseconst response = await client.cluster.putSettings({ persistent: { "cluster.max_shards_per_node": null, }, }); console.log(response);PUT _cluster/settings { "persistent" : { "cluster.max_shards_per_node": null } }

Check the current status of the cluster according to the shards capacity indicator:

Python:

    resp = client.health_report(
        feature="shards_capacity",
    )
    print(resp)

Ruby:

    response = client.health_report(
      feature: 'shards_capacity'
    )
    puts response

JavaScript:

    const response = await client.healthReport({
      feature: "shards_capacity",
    });
    console.log(response);

Console:

    GET _health_report/shards_capacity

The response will look like this:

    {
      "cluster_name": "...",
      "indicators": {
        "shards_capacity": {
          "status": "yellow",
          "symptom": "Cluster is close to reaching the configured maximum number of shards for data nodes.",
          "details": {
            "data": {
              "max_shards_in_cluster": 1000,
              "current_used_shards": 988
            },
            "frozen": {
              "max_shards_in_cluster": 3000
            }
          },
          "impacts": [
            ...
          ],
          "diagnosis": [
            ...
          ]
        }
      }
    }
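To make the numbers in a response like the one above easier to act on, the following sketch extracts how many shards remain before a tier hits its limit. The helper name `shard_headroom` is hypothetical, and the sample dictionary copies only the fields needed from the yellow response; a tier that omits current_used_shards yields no result.

```python
from typing import Optional, Tuple


def shard_headroom(report: dict, tier: str = "data") -> Optional[Tuple[int, int, int]]:
    """Return (used, limit, remaining) for a tier, or None if usage is not reported."""
    details = report["indicators"]["shards_capacity"]["details"][tier]
    limit = details["max_shards_in_cluster"]
    used = details.get("current_used_shards")
    if used is None:
        return None
    return used, limit, limit - used


# Values copied from the yellow sample response.
sample = {
    "indicators": {
        "shards_capacity": {
            "status": "yellow",
            "details": {
                "data": {"max_shards_in_cluster": 1000, "current_used_shards": 988},
                "frozen": {"max_shards_in_cluster": 3000},
            },
        }
    }
}

print(shard_headroom(sample, "data"))    # (988, 1000, 12)
print(shard_headroom(sample, "frozen"))  # None
```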

Update the cluster.max_shards_per_node setting using the cluster settings API:

Python:

    resp = client.cluster.put_settings(
        persistent={
            "cluster.max_shards_per_node": 1200
        },
    )
    print(resp)

Ruby:

    response = client.cluster.put_settings(
      body: {
        persistent: {
          'cluster.max_shards_per_node' => 1200
        }
      }
    )
    puts response

JavaScript:

    const response = await client.cluster.putSettings({
      persistent: {
        "cluster.max_shards_per_node": 1200,
      },
    });
    console.log(response);

Console:

    PUT _cluster/settings
    {
      "persistent" : {
        "cluster.max_shards_per_node": 1200
      }
    }
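Before sending an update like the one above, it can help to confirm that the new value actually leaves room above current usage. This is a minimal sketch under stated assumptions: `raise_limit_body` is a hypothetical helper, and `data_node_count` defaults to a single eligible data node, which you would replace with your own count.

```python
import json


def raise_limit_body(setting: str, new_per_node_limit: int,
                     current_used_shards: int, data_node_count: int = 1) -> dict:
    """Build a persistent cluster-settings body, rejecting limits that leave no headroom."""
    cluster_limit = new_per_node_limit * data_node_count
    if cluster_limit <= current_used_shards:
        raise ValueError(
            f"{setting}={new_per_node_limit} allows {cluster_limit} shards, "
            f"but {current_used_shards} are already in use"
        )
    return {"persistent": {setting: new_per_node_limit}}


body = raise_limit_body("cluster.max_shards_per_node", 1200, current_used_shards=988)
print(json.dumps(body))  # {"persistent": {"cluster.max_shards_per_node": 1200}}
```

The resulting dictionary has the same shape as the request body in the Console example, so its persistent entry can be passed to the client call shown there.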

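This increase should only be temporary. As a long-term solution, we recommend that you add nodes to the oversharded data tier or reduce your cluster's shard count on nodes that do not belong to the frozen tier.

To verify that the change has fixed the issue, get the current status of the shards_capacity indicator by checking the data section of the health API:

Python:

    resp = client.health_report(
        feature="shards_capacity",
    )
    print(resp)

Ruby:

    response = client.health_report(
      feature: 'shards_capacity'
    )
    puts response

JavaScript:

    const response = await client.healthReport({
      feature: "shards_capacity",
    });
    console.log(response);

Console:

    GET _health_report/shards_capacity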

The response will look like this:

    {
      "cluster_name": "...",
      "indicators": {
        "shards_capacity": {
          "status": "green",
          "symptom": "The cluster has enough room to add new shards.",
          "details": {
            "data": {
              "max_shards_in_cluster": 1200
            },
            "frozen": {
              "max_shards_in_cluster": 3000
            }
          }
        }
      }
    }
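The verification step can also be expressed as a small check on the parsed response. In this sketch, `change_verified` is a hypothetical helper that confirms both that the indicator is green and that the tier reports the expected cluster-wide limit; the sample assumes a single data node, so the cluster-wide limit equals the per-node value of 1200.

```python
def change_verified(report: dict, tier: str, expected_limit: int) -> bool:
    """True when the indicator is green and the tier shows the expected limit."""
    indicator = report["indicators"]["shards_capacity"]
    limit = indicator["details"][tier]["max_shards_in_cluster"]
    return indicator["status"] == "green" and limit == expected_limit


# Values copied from the green sample response.
green = {
    "indicators": {
        "shards_capacity": {
            "status": "green",
            "details": {
                "data": {"max_shards_in_cluster": 1200},
                "frozen": {"max_shards_in_cluster": 3000},
            },
        }
    }
}

print(change_verified(green, "data", 1200))  # True
print(change_verified(green, "data", 1000))  # False
```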

When a long-term solution is in place, we recommend that you reset the cluster.max_shards_per_node limit:

Python:

    resp = client.cluster.put_settings(
        persistent={
            "cluster.max_shards_per_node": None
        },
    )
    print(resp)

Ruby:

    response = client.cluster.put_settings(
      body: {
        persistent: {
          'cluster.max_shards_per_node' => nil
        }
      }
    )
    puts response

JavaScript:

    const response = await client.cluster.putSettings({
      persistent: {
        "cluster.max_shards_per_node": null,
      },
    });
    console.log(response);

Console:

    PUT _cluster/settings
    {
      "persistent" : {
        "cluster.max_shards_per_node": null
      }
    }
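One of the long-term options mentioned earlier is adding nodes to the oversharded tier. The sketch below estimates how many data nodes a given shard count would need at a chosen utilization. Both `data_nodes_needed` and the 80 percent target are illustrative assumptions rather than Elasticsearch recommendations; the per-node default of 1000 matches the limit shown in the sample responses.

```python
def data_nodes_needed(total_shards: int, max_shards_per_node: int = 1000,
                      target_percent: int = 80) -> int:
    """Smallest node count that keeps shard usage at or below target_percent of the limit."""
    if total_shards < 0 or max_shards_per_node <= 0 or not 0 < target_percent <= 100:
        raise ValueError("invalid sizing inputs")
    usable_per_node = max_shards_per_node * target_percent // 100
    if usable_per_node == 0:
        raise ValueError("target leaves no usable shards per node")
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-total_shards // usable_per_node)


print(data_nodes_needed(988))   # 2
print(data_nodes_needed(2400))  # 3
```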

Cluster is close to reaching the configured maximum number of shards for frozen nodes.

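The cluster.max_shards_per_node.frozen cluster setting limits the maximum number of open shards for a cluster, counting only data nodes that belong to the frozen tier.

This symptom indicates that action should be taken; otherwise, creating new indices or upgrading the cluster could be blocked.

If you are confident that your changes will not destabilize the cluster, you can temporarily increase the limit using the cluster update settings API.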

Use Kibana

- Log in to the Elastic Cloud console.

- On the Elasticsearch Service panel, click the name of your deployment.

  If the name of your deployment is disabled, your Kibana instances might be unhealthy, in which case contact Elastic Support. If your deployment does not include Kibana, you only need to enable it first.

- Open your deployment's side navigation menu (under the Elastic logo in the upper left corner) and go to Dev Tools > Console.

- Check the current status of the cluster according to the shards capacity indicator:

  Python:

      resp = client.health_report(
          feature="shards_capacity",
      )
      print(resp)

  Ruby:

      response = client.health_report(
        feature: 'shards_capacity'
      )
      puts response

  JavaScript:

      const response = await client.healthReport({
        feature: "shards_capacity",
      });
      console.log(response);

  Console:

      GET _health_report/shards_capacity

  The response will look like this:

      {
        "cluster_name": "...",
        "indicators": {
          "shards_capacity": {
            "status": "yellow",
            "symptom": "Cluster is close to reaching the configured maximum number of shards for frozen nodes.",
            "details": {
              "data": {
                "max_shards_in_cluster": 1000
              },
              "frozen": {
                "max_shards_in_cluster": 3000,
                "current_used_shards": 2998
              }
            },
            "impacts": [
              ...
            ],
            "diagnosis": [
              ...
            ]
          }
        }
      }

- Update the cluster.max_shards_per_node.frozen setting:

  Python:

      resp = client.cluster.put_settings(
          persistent={
              "cluster.max_shards_per_node.frozen": 3200
          },
      )
      print(resp)

  Ruby:

      response = client.cluster.put_settings(
        body: {
          persistent: {
            'cluster.max_shards_per_node.frozen' => 3200
          }
        }
      )
      puts response

  JavaScript:

      const response = await client.cluster.putSettings({
        persistent: {
          "cluster.max_shards_per_node.frozen": 3200,
        },
      });
      console.log(response);

  Console:

      PUT _cluster/settings
      {
        "persistent" : {
          "cluster.max_shards_per_node.frozen": 3200
        }
      }

  This increase should only be temporary. As a long-term solution, we recommend that you add nodes to the oversharded data tier or reduce your cluster's shard count on nodes that belong to the frozen tier.

- To verify that the change has fixed the issue, get the current status of the shards_capacity indicator by checking the frozen section of the health API:

  Python:

      resp = client.health_report(
          feature="shards_capacity",
      )
      print(resp)

  Ruby:

      response = client.health_report(
        feature: 'shards_capacity'
      )
      puts response

  JavaScript:

      const response = await client.healthReport({
        feature: "shards_capacity",
      });
      console.log(response);

  Console:

      GET _health_report/shards_capacity

  The response will look like this:

      {
        "cluster_name": "...",
        "indicators": {
          "shards_capacity": {
            "status": "green",
            "symptom": "The cluster has enough room to add new shards.",
            "details": {
              "data": {
                "max_shards_in_cluster": 1000
              },
              "frozen": {
                "max_shards_in_cluster": 3200
              }
            }
          }
        }
      }

- When a long-term solution is in place, we recommend that you reset the cluster.max_shards_per_node.frozen limit:

  Python:

      resp = client.cluster.put_settings(
          persistent={
              "cluster.max_shards_per_node.frozen": None
          },
      )
      print(resp)

  Ruby:

      response = client.cluster.put_settings(
        body: {
          persistent: {
            'cluster.max_shards_per_node.frozen' => nil
          }
        }
      )
      puts response

  JavaScript:

      const response = await client.cluster.putSettings({
        persistent: {
          "cluster.max_shards_per_node.frozen": null,
        },
      });
      console.log(response);

  Console:

      PUT _cluster/settings
      {
        "persistent" : {
          "cluster.max_shards_per_node.frozen": null
        }
      }

Check the current status of the cluster according to the shards capacity indicator:

Python:

    resp = client.health_report(
        feature="shards_capacity",
    )
    print(resp)

Ruby:

    response = client.health_report(
      feature: 'shards_capacity'
    )
    puts response

JavaScript:

    const response = await client.healthReport({
      feature: "shards_capacity",
    });
    console.log(response);

Console:

    GET _health_report/shards_capacity

The response will look like this:

    {
      "cluster_name": "...",
      "indicators": {
        "shards_capacity": {
          "status": "yellow",
          "symptom": "Cluster is close to reaching the configured maximum number of shards for frozen nodes.",
          "details": {
            "data": {
              "max_shards_in_cluster": 1000
            },
            "frozen": {
              "max_shards_in_cluster": 3000,
              "current_used_shards": 2998
            }
          },
          "impacts": [
            ...
          ],
          "diagnosis": [
            ...
          ]
        }
      }
    }
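Since the same report covers both the data and frozen groups, a short loop can tell which one triggered the warning. The sketch below is one possible approach: `tiers_near_limit` is a hypothetical helper, and its 90 percent threshold is an arbitrary illustration, not the rule Elasticsearch uses to set the indicator status.

```python
def tiers_near_limit(report: dict, threshold: float = 0.9) -> list:
    """Names of tiers whose reported usage is at or above the given fraction of the limit."""
    details = report["indicators"]["shards_capacity"]["details"]
    flagged = []
    for tier, info in details.items():
        used = info.get("current_used_shards")
        limit = info["max_shards_in_cluster"]
        # Tiers that do not report usage are skipped rather than guessed at.
        if used is not None and limit > 0 and used / limit >= threshold:
            flagged.append(tier)
    return flagged


# Values copied from the frozen-tier sample response.
frozen_sample = {
    "indicators": {
        "shards_capacity": {
            "status": "yellow",
            "details": {
                "data": {"max_shards_in_cluster": 1000},
                "frozen": {"max_shards_in_cluster": 3000, "current_used_shards": 2998},
            },
        }
    }
}

print(tiers_near_limit(frozen_sample))  # ['frozen']
```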

Update the cluster.max_shards_per_node.frozen setting using the cluster settings API:

Python:

    resp = client.cluster.put_settings(
        persistent={
            "cluster.max_shards_per_node.frozen": 3200
        },
    )
    print(resp)

Ruby:

    response = client.cluster.put_settings(
      body: {
        persistent: {
          'cluster.max_shards_per_node.frozen' => 3200
        }
      }
    )
    puts response

JavaScript:

    const response = await client.cluster.putSettings({
      persistent: {
        "cluster.max_shards_per_node.frozen": 3200,
      },
    });
    console.log(response);

Console:

    PUT _cluster/settings
    {
      "persistent" : {
        "cluster.max_shards_per_node.frozen": 3200
      }
    }

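This increase should only be temporary. As a long-term solution, we recommend that you add nodes to the oversharded data tier or reduce your cluster's shard count on nodes that belong to the frozen tier.

To verify that the change has fixed the issue, get the current status of the shards_capacity indicator by checking the frozen section of the health API:

Python:

    resp = client.health_report(
        feature="shards_capacity",
    )
    print(resp)

Ruby:

    response = client.health_report(
      feature: 'shards_capacity'
    )
    puts response

JavaScript:

    const response = await client.healthReport({
      feature: "shards_capacity",
    });
    console.log(response);

Console:

    GET _health_report/shards_capacity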

The response will look like this:

    {
      "cluster_name": "...",
      "indicators": {
        "shards_capacity": {
          "status": "green",
          "symptom": "The cluster has enough room to add new shards.",
          "details": {
            "data": {
              "max_shards_in_cluster": 1000
            },
            "frozen": {
              "max_shards_in_cluster": 3200
            }
          }
        }
      }
    }
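If the indicator does not turn green immediately, the check can be repeated a few times. The sketch below polls through an injected `fetch_report` callable so that it runs without a cluster; `wait_for_green`, the attempt count, and the delay are all illustrative assumptions. In practice the callable might wrap client.health_report(feature="shards_capacity").

```python
import time
from typing import Callable


def wait_for_green(fetch_report: Callable[[], dict], attempts: int = 5,
                   delay_seconds: float = 1.0) -> bool:
    """Poll the shards_capacity indicator until it is green or attempts run out."""
    for attempt in range(attempts):
        status = fetch_report()["indicators"]["shards_capacity"]["status"]
        if status == "green":
            return True
        if attempt < attempts - 1:
            time.sleep(delay_seconds)
    return False


# Stand-in for a real health report call: yellow twice, then green.
statuses = iter(["yellow", "yellow", "green"])


def fake_fetch() -> dict:
    return {"indicators": {"shards_capacity": {"status": next(statuses)}}}


print(wait_for_green(fake_fetch, attempts=5, delay_seconds=0))  # True
```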

When a long-term solution is in place, we recommend that you reset the cluster.max_shards_per_node.frozen limit:

Python:

    resp = client.cluster.put_settings(
        persistent={
            "cluster.max_shards_per_node.frozen": None
        },
    )
    print(resp)

Ruby:

    response = client.cluster.put_settings(
      body: {
        persistent: {
          'cluster.max_shards_per_node.frozen' => nil
        }
      }
    )
    puts response

JavaScript:

    const response = await client.cluster.putSettings({
      persistent: {
        "cluster.max_shards_per_node.frozen": null,
      },
    });
    console.log(response);

Console:

    PUT _cluster/settings
    {
      "persistent" : {
        "cluster.max_shards_per_node.frozen": null
      }
    }
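The reset requests above rely on a null value to clear the override. As a small sketch of how that looks from Python, the hypothetical helper `reset_body` maps each setting name to None, which the standard json module serializes as null, matching the Console request body.

```python
import json


def reset_body(*settings: str) -> dict:
    """Build a persistent cluster-settings body that clears each named setting."""
    return {"persistent": {name: None for name in settings}}


body = reset_body("cluster.max_shards_per_node", "cluster.max_shards_per_node.frozen")
print(json.dumps(body))
# {"persistent": {"cluster.max_shards_per_node": null, "cluster.max_shards_per_node.frozen": null}}
```

Building both resets in one body is a convenience of this sketch; each setting can equally be cleared in its own request, as in the steps above.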