- Elasticsearch 指南其他版本

- 8.17 中的新功能

- Elasticsearch 基础

- 快速入门

- 设置 Elasticsearch

- 升级 Elasticsearch

- 索引模块

- 映射

- 文本分析

- 索引模板

- 数据流

- 摄取管道

- 别名

- 搜索您的数据

- 重新排名

- 查询 DSL

- 聚合

- 地理空间分析

- 连接器

- EQL

- ES|QL

- SQL

- 脚本

- 数据管理

- 自动缩放

- 监视集群

- 汇总或转换数据

- 设置高可用性集群

- 快照和还原

- 保护 Elastic Stack 的安全

- Watcher

- 命令行工具

- elasticsearch-certgen

- elasticsearch-certutil

- elasticsearch-create-enrollment-token

- elasticsearch-croneval

- elasticsearch-keystore

- elasticsearch-node

- elasticsearch-reconfigure-node

- elasticsearch-reset-password

- elasticsearch-saml-metadata

- elasticsearch-service-tokens

- elasticsearch-setup-passwords

- elasticsearch-shard

- elasticsearch-syskeygen

- elasticsearch-users

- 优化

- 故障排除

- 修复常见的集群问题

- 诊断未分配的分片

- 向系统中添加丢失的层

- 允许 Elasticsearch 在系统中分配数据

- 允许 Elasticsearch 分配索引

- 索引将索引分配过滤器与数据层节点角色混合,以在数据层之间移动

- 没有足够的节点来分配所有分片副本

- 单个节点上索引的分片总数已超过

- 每个节点的分片总数已达到

- 故障排除损坏

- 修复磁盘空间不足的数据节点

- 修复磁盘空间不足的主节点

- 修复磁盘空间不足的其他角色节点

- 启动索引生命周期管理

- 启动快照生命周期管理

- 从快照恢复

- 故障排除损坏的存储库

- 解决重复的快照策略失败问题

- 故障排除不稳定的集群

- 故障排除发现

- 故障排除监控

- 故障排除转换

- 故障排除 Watcher

- 故障排除搜索

- 故障排除分片容量健康问题

- 故障排除不平衡的集群

- 捕获诊断信息

- REST API

- API 约定

- 通用选项

- REST API 兼容性

- 自动缩放 API

- 行为分析 API

- 紧凑和对齐文本 (CAT) API

- 集群 API

- 跨集群复制 API

- 连接器 API

- 数据流 API

- 文档 API

- 丰富 API

- EQL API

- ES|QL API

- 功能 API

- Fleet API

- 图表探索 API

- 索引 API

- 别名是否存在

- 别名

- 分析

- 分析索引磁盘使用量

- 清除缓存

- 克隆索引

- 关闭索引

- 创建索引

- 创建或更新别名

- 创建或更新组件模板

- 创建或更新索引模板

- 创建或更新索引模板(旧版)

- 删除组件模板

- 删除悬挂索引

- 删除别名

- 删除索引

- 删除索引模板

- 删除索引模板(旧版)

- 存在

- 字段使用情况统计信息

- 刷新

- 强制合并

- 获取别名

- 获取组件模板

- 获取字段映射

- 获取索引

- 获取索引设置

- 获取索引模板

- 获取索引模板(旧版)

- 获取映射

- 导入悬挂索引

- 索引恢复

- 索引段

- 索引分片存储

- 索引统计信息

- 索引模板是否存在(旧版)

- 列出悬挂索引

- 打开索引

- 刷新

- 解析索引

- 解析集群

- 翻转

- 收缩索引

- 模拟索引

- 模拟模板

- 拆分索引

- 解冻索引

- 更新索引设置

- 更新映射

- 索引生命周期管理 API

- 推理 API

- 信息 API

- 摄取 API

- 许可 API

- Logstash API

- 机器学习 API

- 机器学习异常检测 API

- 机器学习数据帧分析 API

- 机器学习训练模型 API

- 迁移 API

- 节点生命周期 API

- 查询规则 API

- 重新加载搜索分析器 API

- 存储库计量 API

- 汇总 API

- 根 API

- 脚本 API

- 搜索 API

- 搜索应用程序 API

- 可搜索快照 API

- 安全 API

- 身份验证

- 更改密码

- 清除缓存

- 清除角色缓存

- 清除权限缓存

- 清除 API 密钥缓存

- 清除服务帐户令牌缓存

- 创建 API 密钥

- 创建或更新应用程序权限

- 创建或更新角色映射

- 创建或更新角色

- 批量创建或更新角色 API

- 批量删除角色 API

- 创建或更新用户

- 创建服务帐户令牌

- 委托 PKI 身份验证

- 删除应用程序权限

- 删除角色映射

- 删除角色

- 删除服务帐户令牌

- 删除用户

- 禁用用户

- 启用用户

- 注册 Kibana

- 注册节点

- 获取 API 密钥信息

- 获取应用程序权限

- 获取内置权限

- 获取角色映射

- 获取角色

- 查询角色

- 获取服务帐户

- 获取服务帐户凭据

- 获取安全设置

- 获取令牌

- 获取用户权限

- 获取用户

- 授予 API 密钥

- 具有权限

- 使 API 密钥失效

- 使令牌失效

- OpenID Connect 准备身份验证

- OpenID Connect 身份验证

- OpenID Connect 注销

- 查询 API 密钥信息

- 查询用户

- 更新 API 密钥

- 更新安全设置

- 批量更新 API 密钥

- SAML 准备身份验证

- SAML 身份验证

- SAML 注销

- SAML 失效

- SAML 完成注销

- SAML 服务提供商元数据

- SSL 证书

- 激活用户配置文件

- 禁用用户配置文件

- 启用用户配置文件

- 获取用户配置文件

- 建议用户配置文件

- 更新用户配置文件数据

- 具有用户配置文件权限

- 创建跨集群 API 密钥

- 更新跨集群 API 密钥

- 快照和还原 API

- 快照生命周期管理 API

- SQL API

- 同义词 API

- 文本结构 API

- 转换 API

- 使用情况 API

- Watcher API

- 定义

- 迁移指南

- 发行说明

- Elasticsearch 版本 8.17.0

- Elasticsearch 版本 8.16.1

- Elasticsearch 版本 8.16.0

- Elasticsearch 版本 8.15.5

- Elasticsearch 版本 8.15.4

- Elasticsearch 版本 8.15.3

- Elasticsearch 版本 8.15.2

- Elasticsearch 版本 8.15.1

- Elasticsearch 版本 8.15.0

- Elasticsearch 版本 8.14.3

- Elasticsearch 版本 8.14.2

- Elasticsearch 版本 8.14.1

- Elasticsearch 版本 8.14.0

- Elasticsearch 版本 8.13.4

- Elasticsearch 版本 8.13.3

- Elasticsearch 版本 8.13.2

- Elasticsearch 版本 8.13.1

- Elasticsearch 版本 8.13.0

- Elasticsearch 版本 8.12.2

- Elasticsearch 版本 8.12.1

- Elasticsearch 版本 8.12.0

- Elasticsearch 版本 8.11.4

- Elasticsearch 版本 8.11.3

- Elasticsearch 版本 8.11.2

- Elasticsearch 版本 8.11.1

- Elasticsearch 版本 8.11.0

- Elasticsearch 版本 8.10.4

- Elasticsearch 版本 8.10.3

- Elasticsearch 版本 8.10.2

- Elasticsearch 版本 8.10.1

- Elasticsearch 版本 8.10.0

- Elasticsearch 版本 8.9.2

- Elasticsearch 版本 8.9.1

- Elasticsearch 版本 8.9.0

- Elasticsearch 版本 8.8.2

- Elasticsearch 版本 8.8.1

- Elasticsearch 版本 8.8.0

- Elasticsearch 版本 8.7.1

- Elasticsearch 版本 8.7.0

- Elasticsearch 版本 8.6.2

- Elasticsearch 版本 8.6.1

- Elasticsearch 版本 8.6.0

- Elasticsearch 版本 8.5.3

- Elasticsearch 版本 8.5.2

- Elasticsearch 版本 8.5.1

- Elasticsearch 版本 8.5.0

- Elasticsearch 版本 8.4.3

- Elasticsearch 版本 8.4.2

- Elasticsearch 版本 8.4.1

- Elasticsearch 版本 8.4.0

- Elasticsearch 版本 8.3.3

- Elasticsearch 版本 8.3.2

- Elasticsearch 版本 8.3.1

- Elasticsearch 版本 8.3.0

- Elasticsearch 版本 8.2.3

- Elasticsearch 版本 8.2.2

- Elasticsearch 版本 8.2.1

- Elasticsearch 版本 8.2.0

- Elasticsearch 版本 8.1.3

- Elasticsearch 版本 8.1.2

- Elasticsearch 版本 8.1.1

- Elasticsearch 版本 8.1.0

- Elasticsearch 版本 8.0.1

- Elasticsearch 版本 8.0.0

- Elasticsearch 版本 8.0.0-rc2

- Elasticsearch 版本 8.0.0-rc1

- Elasticsearch 版本 8.0.0-beta1

- Elasticsearch 版本 8.0.0-alpha2

- Elasticsearch 版本 8.0.0-alpha1

- 依赖项和版本

教程:基于单向跨集群复制的灾难恢复

编辑教程:基于单向跨集群复制的灾难恢复

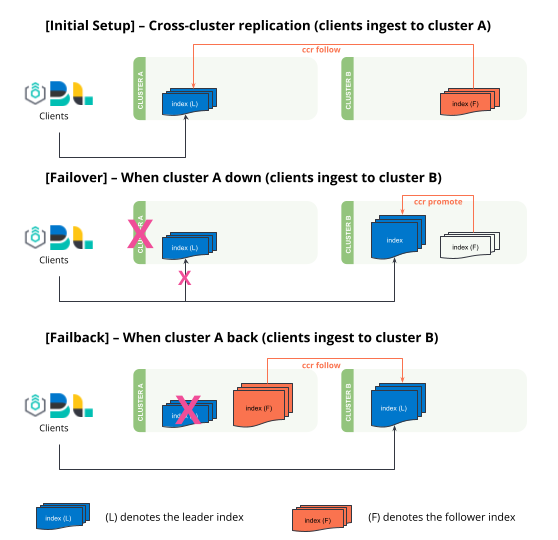

编辑了解如何在两个集群之间基于单向跨集群复制进行故障转移和故障恢复。您还可以访问 双向灾难恢复,设置可自动故障转移和故障恢复而无需人工干预的复制数据流。

- 设置从

clusterA复制到clusterB的单向跨集群复制。 - 故障转移 - 如果

clusterA脱机,clusterB需要将“追随者”索引“提升”为常规索引,以允许写入操作。所有摄取都需要重定向到clusterB,这由客户端(Logstash、Beats、Elastic Agents 等)控制。 - 故障恢复 - 当

clusterA重新联机时,它将承担追随者的角色,并从clusterB复制领导者索引。

跨集群复制仅提供复制用户生成索引的功能。跨集群复制并非旨在复制系统生成的索引或快照设置,并且无法跨集群复制 ILM 或 SLM 策略。请在跨集群复制 限制 中了解更多信息。

先决条件

编辑在完成本教程之前,请设置跨集群复制以连接两个集群并配置追随者索引。

在本教程中,kibana_sample_data_ecommerce 从 clusterA 复制到 clusterB。

resp = client.cluster.put_settings( persistent={ "cluster": { "remote": { "clusterA": { "mode": "proxy", "skip_unavailable": "true", "server_name": "clustera.es.region-a.gcp.elastic-cloud.com", "proxy_socket_connections": "18", "proxy_address": "clustera.es.region-a.gcp.elastic-cloud.com:9400" } } } }, ) print(resp)

response = client.cluster.put_settings( body: { persistent: { cluster: { remote: { "clusterA": { mode: 'proxy', skip_unavailable: 'true', server_name: 'clustera.es.region-a.gcp.elastic-cloud.com', proxy_socket_connections: '18', proxy_address: 'clustera.es.region-a.gcp.elastic-cloud.com:9400' } } } } } ) puts response

const response = await client.cluster.putSettings({ persistent: { cluster: { remote: { clusterA: { mode: "proxy", skip_unavailable: "true", server_name: "clustera.es.region-a.gcp.elastic-cloud.com", proxy_socket_connections: "18", proxy_address: "clustera.es.region-a.gcp.elastic-cloud.com:9400", }, }, }, }, }); console.log(response);

### On clusterB ### PUT _cluster/settings { "persistent": { "cluster": { "remote": { "clusterA": { "mode": "proxy", "skip_unavailable": "true", "server_name": "clustera.es.region-a.gcp.elastic-cloud.com", "proxy_socket_connections": "18", "proxy_address": "clustera.es.region-a.gcp.elastic-cloud.com:9400" } } } } }

resp = client.ccr.follow( index="kibana_sample_data_ecommerce2", wait_for_active_shards="1", remote_cluster="clusterA", leader_index="kibana_sample_data_ecommerce", ) print(resp)

const response = await client.ccr.follow({ index: "kibana_sample_data_ecommerce2", wait_for_active_shards: 1, remote_cluster: "clusterA", leader_index: "kibana_sample_data_ecommerce", }); console.log(response);

### On clusterB ### PUT /kibana_sample_data_ecommerce2/_ccr/follow?wait_for_active_shards=1 { "remote_cluster": "clusterA", "leader_index": "kibana_sample_data_ecommerce" }

写入(例如摄取或更新)应仅在领导者索引上发生。追随者索引是只读的,并将拒绝任何写入。

当 clusterA 宕机时的故障转移

编辑-

将

clusterB中的追随者索引提升为常规索引,以便它们接受写入。这可以通过以下方式实现:- 首先,暂停追随者索引的索引跟随。

- 接下来,关闭追随者索引。

- 取消跟随领导者索引。

- 最后,打开追随者索引(此时它是一个常规索引)。

resp = client.ccr.pause_follow( index="kibana_sample_data_ecommerce2", ) print(resp) resp1 = client.indices.close( index="kibana_sample_data_ecommerce2", ) print(resp1) resp2 = client.ccr.unfollow( index="kibana_sample_data_ecommerce2", ) print(resp2) resp3 = client.indices.open( index="kibana_sample_data_ecommerce2", ) print(resp3)

response = client.ccr.pause_follow( index: 'kibana_sample_data_ecommerce2' ) puts response response = client.indices.close( index: 'kibana_sample_data_ecommerce2' ) puts response response = client.ccr.unfollow( index: 'kibana_sample_data_ecommerce2' ) puts response response = client.indices.open( index: 'kibana_sample_data_ecommerce2' ) puts response

const response = await client.ccr.pauseFollow({ index: "kibana_sample_data_ecommerce2", }); console.log(response); const response1 = await client.indices.close({ index: "kibana_sample_data_ecommerce2", }); console.log(response1); const response2 = await client.ccr.unfollow({ index: "kibana_sample_data_ecommerce2", }); console.log(response2); const response3 = await client.indices.open({ index: "kibana_sample_data_ecommerce2", }); console.log(response3);

### On clusterB ### POST /kibana_sample_data_ecommerce2/_ccr/pause_follow POST /kibana_sample_data_ecommerce2/_close POST /kibana_sample_data_ecommerce2/_ccr/unfollow POST /kibana_sample_data_ecommerce2/_open

-

在客户端(Logstash、Beats、Elastic Agent)端,手动重新启用

kibana_sample_data_ecommerce2的摄取,并将流量重定向到clusterB。您还应在此期间将所有搜索流量重定向到clusterB集群。您可以通过将文档摄取到此索引来模拟此操作。您应该注意到此索引现在是可写的。resp = client.index( index="kibana_sample_data_ecommerce2", document={ "user": "kimchy" }, ) print(resp)

response = client.index( index: 'kibana_sample_data_ecommerce2', body: { user: 'kimchy' } ) puts response

const response = await client.index({ index: "kibana_sample_data_ecommerce2", document: { user: "kimchy", }, }); console.log(response);

### On clusterB ### POST kibana_sample_data_ecommerce2/_doc/ { "user": "kimchy" }

当 clusterA 恢复时的故障恢复

编辑当 clusterA 恢复时,clusterB 将成为新的领导者,而 clusterA 将成为追随者。

-

在

clusterA上设置远程集群clusterB。resp = client.cluster.put_settings( persistent={ "cluster": { "remote": { "clusterB": { "mode": "proxy", "skip_unavailable": "true", "server_name": "clusterb.es.region-b.gcp.elastic-cloud.com", "proxy_socket_connections": "18", "proxy_address": "clusterb.es.region-b.gcp.elastic-cloud.com:9400" } } } }, ) print(resp)

response = client.cluster.put_settings( body: { persistent: { cluster: { remote: { "clusterB": { mode: 'proxy', skip_unavailable: 'true', server_name: 'clusterb.es.region-b.gcp.elastic-cloud.com', proxy_socket_connections: '18', proxy_address: 'clusterb.es.region-b.gcp.elastic-cloud.com:9400' } } } } } ) puts response

const response = await client.cluster.putSettings({ persistent: { cluster: { remote: { clusterB: { mode: "proxy", skip_unavailable: "true", server_name: "clusterb.es.region-b.gcp.elastic-cloud.com", proxy_socket_connections: "18", proxy_address: "clusterb.es.region-b.gcp.elastic-cloud.com:9400", }, }, }, }, }); console.log(response);

### On clusterA ### PUT _cluster/settings { "persistent": { "cluster": { "remote": { "clusterB": { "mode": "proxy", "skip_unavailable": "true", "server_name": "clusterb.es.region-b.gcp.elastic-cloud.com", "proxy_socket_connections": "18", "proxy_address": "clusterb.es.region-b.gcp.elastic-cloud.com:9400" } } } } }

-

在可以将任何索引转换为追随者之前,需要丢弃现有数据。在删除

clusterA上的任何索引之前,请确保clusterB上提供了最新数据。resp = client.indices.delete( index="kibana_sample_data_ecommerce", ) print(resp)

response = client.indices.delete( index: 'kibana_sample_data_ecommerce' ) puts response

const response = await client.indices.delete({ index: "kibana_sample_data_ecommerce", }); console.log(response);

### On clusterA ### DELETE kibana_sample_data_ecommerce

-

在

clusterA上创建一个追随者索引,现在它跟随clusterB中的领导者索引。resp = client.ccr.follow( index="kibana_sample_data_ecommerce", wait_for_active_shards="1", remote_cluster="clusterB", leader_index="kibana_sample_data_ecommerce2", ) print(resp)

const response = await client.ccr.follow({ index: "kibana_sample_data_ecommerce", wait_for_active_shards: 1, remote_cluster: "clusterB", leader_index: "kibana_sample_data_ecommerce2", }); console.log(response);

### On clusterA ### PUT /kibana_sample_data_ecommerce/_ccr/follow?wait_for_active_shards=1 { "remote_cluster": "clusterB", "leader_index": "kibana_sample_data_ecommerce2" }

-

追随者集群上的索引现在包含更新的文档。

resp = client.search( index="kibana_sample_data_ecommerce", q="kimchy", ) print(resp)

response = client.search( index: 'kibana_sample_data_ecommerce', q: 'kimchy' ) puts response

const response = await client.search({ index: "kibana_sample_data_ecommerce", q: "kimchy", }); console.log(response);

### On clusterA ### GET kibana_sample_data_ecommerce/_search?q=kimchy

如果软删除在可以复制到追随者之前被合并掉,则以下过程将因领导者上的历史记录不完整而失败,有关详细信息,请参阅 index.soft_deletes.retention_lease.period。

On this page